Data science: From classroom to industry

L IV (1936) by László Moholy-Nagy, via Kunstmuseum Basel.

I recently gave a brief informal talk to a group of students and alumni at the NYU Center for Data Science, where I did my master’s in 2019–2021. Alumni were invited to speak on data science in industry, and I focused my talk on the ways that data science in industry differs from data science in the classroom. So far as I could tell, the presentation was well received, so I’ve (loosely) adapted it into a blog post in the hopes that it might be useful to others interested in transitioning into a job “doing data science.”

Disclaimer: While the content of this post is drawn from my work experience, the opinions throughout are mine alone and not those of my present or past employers.

When I studied math in undergrad, “data science” as a field of study (or a profession) didn’t quite exist yet. Much of what I know about data science in the abstract, I learned while doing my master’s in courses on machine learning, statistics, optimization, and ethics. But most of what I’ve learned about being a data scientist has come from hard-won lessons on the job. This post aims to help fill in some of the gap between what you learn about data science in a textbook and what it takes to be an effective data professional in industry.

Some of these insights, though not all, are particular to the subset of the industry which I’ll call “medium-sized consumer-facing tech” companies where I’ve spent my short career thus far. These are companies with a few hundred employees that build apps and services for mass consumption (rather than business-to-business or research lab settings). Even if that’s not the type of place you work (or want to work) at, I suspect you’ll still find the majority of this post valuable.

It’s worth noting at the outset that working in data science and adjacent functions at companies like these can mean a lot of different things. There are data analyst, business intelligence analyst, data engineer, data scientist, machine learning engineer, machine learning platform engineer, and machine learning researcher roles, plus probably a few I haven’t heard of and a few more that haven’t been invented yet. People in these roles may work with different technologies on different problems and interface with different teams.

I won’t belabor the sometimes subtle differences between the roles because there are plenty of good resources on that topic, and because they can vary wildly from company to company or even quarter to quarter as teams get renamed. Best practice would be to focus less on the title and more on the day-to-day of a particular role. Assume that the coolest 10–25% of the job description is purely aspirational stuff that the company isn’t doing yet. Does the role still appeal to you? (If it does, then you should apply!) Supplement that by asking the hiring manager about the specific problems people currently in that role are solving.

The whole job in one diagram

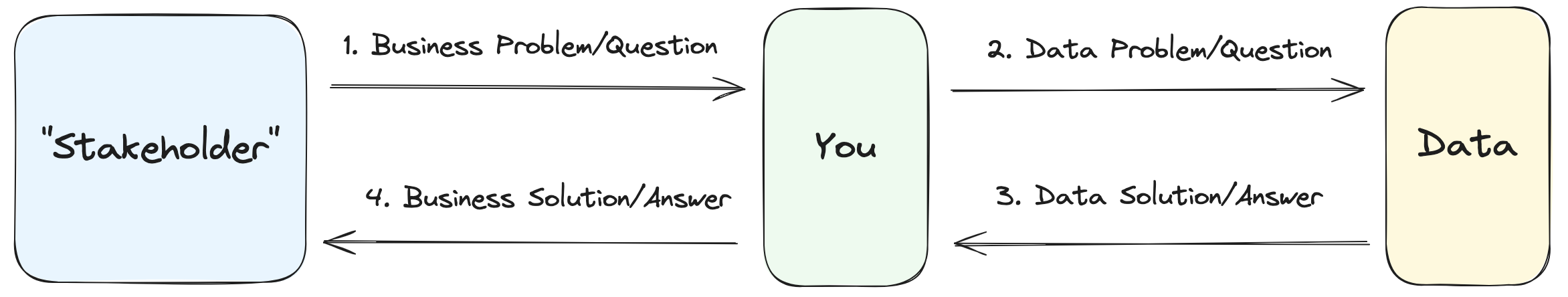

The other reason I won’t split hairs over the differences in different data role details is because they all tend to abide by the diagram below. Whether your business card says “BI ninja” or “ML wizard,” your jobs roughly consists of the following:

The entire career of a data professional is contained in this diagram.

1. A business stakeholder comes to you with a problem.1 Maybe they want to figure out how many users were affected by a bug, which of the three features launched in the last quarter is having the greatest impact on a metric of interest, or which users to offer discounts to.

2. You take the problem and reframe it as a data problem. Finding out how many users were affected by a bug might be just a SQL query away, while figuring out the most impactful feature could require some causal analysis, and deciding which users to offer discounts to could require an uplift analysis.

3. Now that you’ve reframed the business problem as a data problem, you do your best to solve that data problem and suss out the results, still in the raw language of the data.

4. Lastly, you bring it all home by reframing one more time, taking the answer as it appears in your analysis and translating it back into the language of the business and returning to the context of the original framing. That causal analysis you did on which feature had the biggest impact spat out a whole bunch of coefficients, p-values, and confidence intervals in the last step. This is where you condense that nuance back down into a one-pager that doesn’t require a math degree to understand.

What the diagram (hopefully) makes evident is that you are a conduit between the business goals and some mysterious data, and it’s your job to dive into that data breach and pull something out that you can proudly present to solve a problem. The other thing the diagram shows is that there are really two separate sets of skills needed here: steps 2 and 3 are what we often think of when we think of “doing data science,” the part where you’re hunched over your laptop deep in a query or debugging a model. But it’s crucial not to forget steps 1 and 4, the part where you need to listen to and speak with other human beings. Let’s dive into that part first.

The soft skills (which are actually hard)

Your communication skills form the bookends of the actual data work you do to accomplish a task. At the outset, you need to understand what’s being asked of you: what does your stakeholder want? At the end, you need to explain what it is you’ve done.

When it comes to understanding others, it’s valuable to ensure that you’re operating at the correct level of detail. If someone comes to you with a highly specific question—how many users interacted with button X on iOS or button Y followed by button Z on Android within the last N days?—it’s often helpful to pause and zoom out for a second. Yes, you could certainly pull that number, but you wouldn’t be in the position to confidently say that you’ve answered the underlying problem because you don’t know what they’re really asking about.

Instead, ask “what is the context here?” They might follow up with “I’m trying to figure out how many users were affected by bug A, which causes symptom B.” Maybe there’s actually a really easy way to find everyone who experienced symptom B, which is not only easier to query for, but reveals that there were users affected by the bug that wouldn’t have been captured by a literal interpretation of the original request. In this case, zooming out lets us see the full picture. The stakeholder was trying to make your job easier, attempting to do a little of that translation from business problem to data problem, but there is always room for error in that translation, so it helps to “trust but verify.”
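To make the bug example concrete, here is a minimal sketch in Python using the standard library's `sqlite3` module. The `events` table, its columns, and the event names (`tap_button_x`, `tap_button_y`, `symptom_b`) are all invented for illustration, and the "literal" query is a simplified stand-in for the original request; a real event log will look different. The sketch compares the users returned by a literal reading of the request with the users who actually logged the symptom.

```python
import sqlite3

# Hypothetical event log: table, columns, and event names are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, platform TEXT, event_name TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        (1, "ios", "tap_button_x"),
        (1, "ios", "symptom_b"),
        (2, "android", "tap_button_y"),
        (2, "android", "symptom_b"),
        (3, "web", "symptom_b"),     # affected, but invisible to the literal request
        (4, "ios", "tap_button_x"),  # tapped the button but never hit the bug
    ],
)


def users_matching(where_clause):
    """Return the set of user ids with at least one event matching the clause."""
    rows = conn.execute(f"SELECT DISTINCT user_id FROM events WHERE {where_clause}")
    return {row[0] for row in rows}


# A literal reading of the stakeholder's request.
literal_users = users_matching(
    "(platform = 'ios' AND event_name = 'tap_button_x') "
    "OR (platform = 'android' AND event_name = 'tap_button_y')"
)

# The underlying question: who actually experienced symptom B?
affected_users = users_matching("event_name = 'symptom_b'")

missed = affected_users - literal_users          # affected but not counted
false_positives = literal_users - affected_users  # counted but not affected
```

In this toy data the literal query misses user 3, who hit the bug on a platform nobody asked about, and includes user 4, who tapped the button without ever seeing the symptom.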

In other cases the request may have too little detail and it’s your responsibility to fill in the gaps. If someone asks you to simply “predict which users are likely to churn,” you need to find out what time horizon you’re predicting over, how much inactivity constitutes a churn, what the threshold for “likely to churn” is, and probably a dozen other questions. In other words, you need to gather requirements. Your stakeholder will be able to fill in some of these for you, while others you may have to decide on your own and simply document the decision you made (and why).
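One lightweight way to document those decisions is to write them down as explicit parameters rather than burying them in a query. The sketch below is only an illustration: `ChurnSpec`, its fields, and the example values are assumptions of mine, not a standard definition of churn.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ChurnSpec:
    """Hypothetical container for the requirements gathered from a stakeholder."""
    horizon_days: int       # window after the snapshot in which we look for activity
    min_active_days: int    # fewer active days than this in the window counts as churn
    score_threshold: float  # model score at or above which a user is "likely to churn"


def churn_label(active_dates, snapshot, spec):
    """Return True if the user counts as churned in the window after the snapshot."""
    window_end = snapshot + timedelta(days=spec.horizon_days)
    days_active = {d for d in active_dates if snapshot < d <= window_end}
    return len(days_active) < spec.min_active_days


def likely_to_churn(score, spec):
    """Apply the agreed-upon threshold to a model score."""
    return score >= spec.score_threshold


# Example values only; the right numbers depend on the product and the stakeholder.
spec = ChurnSpec(horizon_days=30, min_active_days=1, score_threshold=0.7)
snapshot = date(2024, 1, 1)
returned_user = churn_label([date(2024, 1, 10)], snapshot, spec)
lapsed_user = churn_label([date(2023, 12, 20), date(2024, 2, 15)], snapshot, spec)
```

Keeping the definition in one small object means that changing an answer later (say, a 60-day horizon instead of 30) is a one-line edit that shows up clearly in code review.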

Das Park-Konzert (circa 1908) by Ernst Ludwig Kirchner, via Ketterer Kunst.

Once you’ve done all your fancy data work, it’s time to communicate back what you’ve done and this time you’re the one who needs to be understood. It can be natural for data folks to want to include every bit of detail and nuance available: every assumption, caveat, p-value, confidence interval, and edge case. That’s all great stuff, and it will be invaluable in helping your peers review your work, but most of it should be relegated to the appendix of your final deliverable, if it’s included at all. This may be a controversial take, but at the end of the day it’s not your product manager’s job to interpret p-values, or your director’s job to understand the nuance of a causal model—that’s your job, and it’s also your job to communicate it to the statistical layperson.

There is no silver bullet here. In fact, there are a lot of ways for things to go wrong. Tossing away the nuance and caveats is exactly how statistics end up getting misused and misunderstood, and you may even face pressure at times to provide the interpretation that is most desirable to your stakeholder. On the other hand, if you try to shove a full dissertation into your report, no one is going to understand it (if they even try to read it at all).2

Instead, try to delineate the conclusions that your work does support, while also highlighting the (possibly tempting) claims that it doesn’t support. Your stakeholders won’t always do what you felt the data was telling them to do—a frustrating lesson to learn the first few times—but so long as you’ve provided them with accurate and complete information, you’ve done your job.
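As a rough illustration of that translation step, the sketch below turns a point estimate and a confidence interval into a single plain-language sentence. The function name, the wording, and the rule of checking whether the interval excludes zero are my own simplifications for the sake of example; a real summary would need judgment that a template can't provide.

```python
def summarize_effect(metric, estimate, ci_low, ci_high):
    """Describe a relative effect and its confidence interval in plain language.

    All three numbers are fractions (0.02 means 2%). The interval check is a
    deliberate simplification of how one might decide what the data supports.
    """
    if ci_low > 0:
        verdict = f"The feature likely increased {metric}"
    elif ci_high < 0:
        verdict = f"The feature likely decreased {metric}"
    else:
        verdict = f"We cannot tell whether the feature changed {metric}"
    return (
        f"{verdict} (best estimate {estimate:+.1%}, "
        f"plausible range {ci_low:+.1%} to {ci_high:+.1%})."
    )


# Made-up numbers for illustration.
clear_result = summarize_effect("weekly retention", 0.021, 0.004, 0.038)
unclear_result = summarize_effect("weekly retention", 0.010, -0.020, 0.040)
```

The second case is the important one: saying "we cannot tell" out loud is how you flag the tempting claim that the work does not support.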

Practicing these soft skills is hard, but they’ll pay dividends in just about any role (not to mention in interviews).3 Unfortunately, they’re just one piece of the puzzle.

The hard skills (which are also hard)

Detail of a plate from The Mineral Kingdom (1859) by Johann Gottlob von Kurr, via the Science History Museum.

If this post is about the things that make up data science work that you don’t learn in a course, then why do we need to talk about hard skills? Aren’t those the skills you do learn in a course? The answer is yes, sort of, but also no.

Yes, you’ll learn about cross-validation, gradient descent, activation functions, and all that fun stuff in your books and classes, but the everyday work of a data professional contains about a million other technical tasks that are just as important. Textbooks are great for teaching you the things that never change (the formulas and high-level concepts), but they tend not to focus on the associated work that’s needed to operationalize that knowledge.4

A good chunk of your time is going to be spent on technical work that’s not the technical work you’ve studied, things like: experimental design, version control annoyances, dealing with really big datasets, job scheduling and orchestration, dependency hell, code review (giving and receiving it), defining and QAing metrics, and a whole lot more. You will likely need to learn at least the basics of command line tools, containers, cloud computing services, testing frameworks, and CI/CD frameworks.5

Furthermore, the above work is going to require you to become acquainted with tools you may not have encountered in your studies, for example:

- dashboarding: Tableau and Looker

- data warehouses: Snowflake, BigQuery, and Redshift

- cloud offerings: EC2, S3, Lambda, SageMaker, ECS and EKS, or their Google/Azure equivalents

- MLOps: Comet, WandB, Neptune

- distributed computing: Spark, Flink, Ray, and Dask

- infrastructure, automation, and observability tools: CircleCI, Kubernetes, Prometheus

Maybe you’re looking at this list and thinking, “Why would I need to know about dashboarding tools? I’m a machine learning engineer. I build models, not dashboards!” If you’re at a sufficiently big company, it’s possible that you’ll be specialized enough to only use the technologies particular to your niche, but it will certainly be helpful to at least be familiar with the rest so you can communicate with the people that do use them. On the other hand, at most small and medium-sized companies, there’s typically enough (human) resource contention that you’re likely going to have to occasionally put on your BI hat and build your own dashboard. Embrace the opportunity to be a bit of a generalist: the ability to unblock yourself will come in handy over and over again.

You don’t need to be an expert in all or even any of these tools, but you should at least be generally familiar with the ones relevant to your role, i.e., you know what problem they’re meant to solve, even if you’ve never touched them in your life. The point of this section is not to encourage you to look at the list of tools above as a list of things you need to learn right now in order to get a job.6 (These are tools that early-career applicants typically won’t be expected to know because you’re not likely to have encountered them in a classroom or even in a personal project.) But if you find out on the first day of your job that you’re going to be using Tool XYZ, you should be able to learn about it. Can you go to the homepage and find the “getting started” docs for the SDK in your language of choice? Can you find the API reference to help you understand an unfamiliar function in your codebase? Pick one of the above at random and give it a shot.

To round out this section, I’ll note that the tools you use are not the only change you’ll need to prepare for as you enter industry:

- working in one big Jupyter notebook → working in a shared codebase with pull requests, code review, and merge conflicts

- single scripts that run in one environment → modular packages with managed dependencies

- tests? → tests

- evaluation is offline → evaluation is offline and online

- one metric to optimize → multiple potentially contradictory metrics to optimize

- you own all of the code → your code has hand-offs with systems owned by others

- your dataset fits in memory on your laptop → your dataset doesn’t even fit in your laptop hard drive

- no restrictions on data usage → privacy and data retention compliance needs

- code runs on your machine → code is deployed onto live services which must be monitored, maintained, and scaled
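To make the “tests? → tests” item above a little more concrete, here is a minimal sketch of a metric definition with a few tests next to it. The metric, the function name, and the edge-case decisions are hypothetical; the point is only that each decision about the metric gets written down as an executable check. The tests are plain functions with `assert` statements, a style that a test runner such as pytest can discover by the `test_` prefix.

```python
def conversion_rate(purchasers, visitors):
    """Share of visitors who purchased.

    Both arguments are sets of user ids. Purchasers who never appear among
    the visitors are ignored, and an empty visitor set yields 0.0.
    """
    if not visitors:
        return 0.0
    return len(purchasers & visitors) / len(visitors)


def test_conversion_rate_basic():
    assert conversion_rate({1, 2}, {1, 2, 3, 4}) == 0.5


def test_conversion_rate_no_visitors():
    # Decision documented as a test: no traffic means a rate of zero, not an error.
    assert conversion_rate({1}, set()) == 0.0


def test_conversion_rate_ignores_unknown_purchasers():
    # User 99 purchased but was never logged as a visitor, so they are excluded.
    assert conversion_rate({1, 99}, {1, 2}) == 0.5
```

When a teammate later changes how the metric handles an edge case, a failing test turns that change into an explicit conversation rather than a silent shift in a dashboard.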

Again, you don’t need to master all of the above ahead of time because these are things you will get to learn on the job. I bring them up to highlight that you should expect things to work a little differently in a new job than they do even in your most polished personal project. Keep an open mind to the fact that a little bit of process (and bureaucracy) can go a long way toward keeping data and software projects manageable at industrial scale.

Closing thoughts

The Rock in the Pond (c. 1903) by Joaquim Mir, via Google Arts and Culture.

I’ve highlighted a number of things that are different between industry and the classroom, but it’s worth noting a few important things that are the same. Whether you’re a student or a professional—at any point in your career—you should:

- look things up and read the docs

- ask other people for help

- be open-minded and humble

- keep learning constantly

This last one is the most important, and there is really no wrong way to do it. Read books and blog posts, attend conferences and meet-ups, talk to people about what they’re working on, contribute to open source, build side projects for fun, watch YouTube videos, get involved with data science communities. Share what you learn with others: write blog posts and make YouTube videos, publish your own open source libraries. Find out what resources your company offers for learning and development and take advantage of them.

On the other hand, data science does not need to be your life. Programmers are often expected to write code for fun on their own time—and many do—but be sure to leave room in your life for the things that bring you excitement and fulfillment outside of your career. Take language lessons, go bowling, see a show, spend time with friends and family. You can always read that blog post about “data science in industry” some other time.

Resources

Interested in reading more about data science (and software engineering) in industry but not sure where to find it? Look no further.

On soft skills: Building a Career in Software by Daniel Heller, 7 types of difficult coworkers and how to deal with them by Jordan Cutler and Raviraj Achar, Traits I Value by Andrew Bosworth, Letters to a Young Cog by Claire Stapleton, The Two Healthbar Theory of Burnout by Jeff Dwyer, Addy Osmani’s blog and Ethan Rosenthal’s blog.

Industry blogs: Airbnb, DoorDash, Dropbox, Etsy, GitHub, Meta, Netflix, Pinterest, Spotify engineering and research, Stripe, Yelp.

Blogs: Eugene Yan, Francois Chollet, Xavier Amatriain, Lilian Weng, Vicki Boykis, Simon Willison, Julia Evans, and Sebastian Raschka.

Other websites: ML System Design resources, Applying ML, Made with ML, and Yannic Kilcher’s YouTube channel.

Communities: ML Collective, MLOps Discord, MLUX, Full Stack Deep Learning Slack, MLOps Community, and Locally Optimistic.

1. Eventually, once you’ve developed a strong sense of the business, you may begin to proactively propose projects in response to business problems you’ve detected, or in anticipation of likely future concerns. The key here is that your proposals should always be rooted in a legitimate problem for the business. It’s great if you can also make it an opportunity to learn something or try a new method/technology, but your primary objective is to serve the interests of the business.

2. Lakshmanan, Robinson, and Munn have a good line on this topic in their book Machine Learning Design Patterns: “Model performance is typically stated in terms of cold, hard numbers that are difficult for end users to put into context. Explaining the formula for MAP, MAE, and so on does not provide the intuition that business decision makers are asking for.”

3. Communication is not the only soft skill you should invest in. Many professional (non-technical) skills are covered in Daniel Heller’s excellent Building a Career in Software. For an even shorter take, there’s Jacob Kaplan-Moss, who writes that developers have two jobs: 1) write good code, and 2) be easy to work with.

4. Of course, there are exceptions and you can find plenty of great resources out there on these topics like Chip Huyen’s Designing Machine Learning Systems and the aforementioned Machine Learning Design Patterns (see footnote 2, above). However, the literature and especially the academic coursework on theory tends to vastly outnumber the material on operations.

5. A popular resource on command line tools is “The Missing Semester of Your CS Education” from MIT, which features lectures on a bunch of common utilities you’re likely to need at one point or another. If you’re in a hurry, ChatGPT comes in handy for generating quickie one-liners for “AWS CLI command to get the total file size for all files in an S3 bucket.”

6. The only technology I’d really encourage someone to learn proactively as part of a data career search is SQL. It’s ubiquitous, it’s straightforward, you’re likely to use it every day, and you can learn it in a few weekends.