Ethical AI in The New Yorker (and elsewhere)

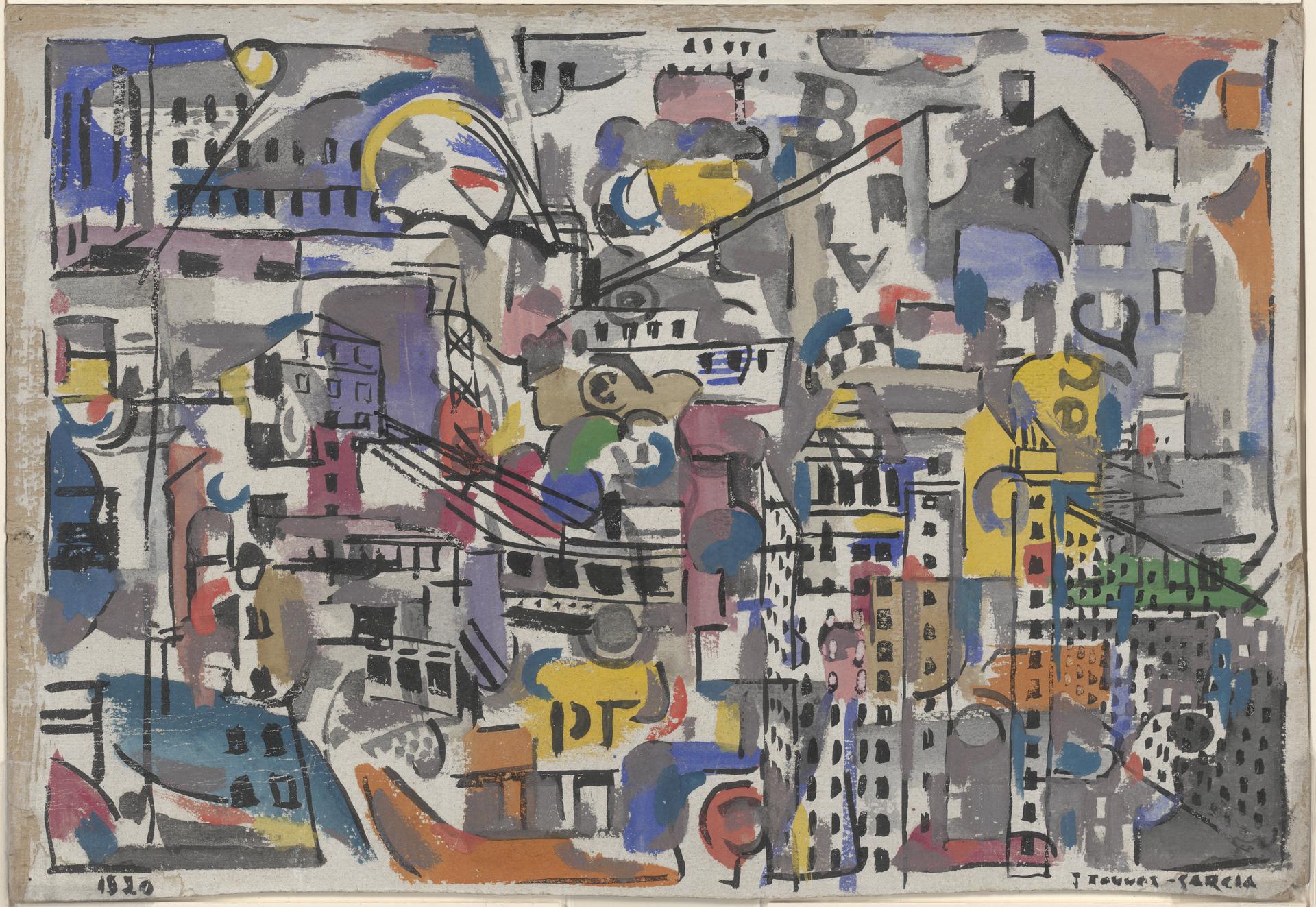

New York City: Bird's Eye View (1920) by Joaquín Torres-García, via the Yale University Art Gallery.

In a recent issue of The New Yorker, Andrew Marantz takes a detailed look into two prominent communities in an ideological battle over AI. “Doomers” are convinced that advances in AI may spell the (possibly literal) end of the world, while the “accelerationists” believe that it’s the doomers who are threatening humanity by stifling innovation and world-improving technological progress.[1] “Among the A.I. Doomsayers” (or, as it’s titled in the print issue, “O.K., Doomer”) is an engrossing and well-reported piece that I recommend to anyone interested in the present and future of artificial intelligence, but it left me feeling that important voices were missing.

After reading the piece, I dashed off an email to The New Yorker with a few of my own thoughts on the topic. I was pleased to learn that my letter was chosen for publication in the April 8th, 2024, issue. My somewhat slapdash note was edited from a rambling 300 words to a tight 199, which you can find on the New Yorker website or below, quoted in full:

Ethical A.I.

Andrew Marantz’s appraisal of two Silicon Valley camps that hold conflicting ideas about A.I.’s development—“doomers,” who think it may spell disaster, and “effective accelerationists,” who believe it will bring unprecedented abundance—offers a fascinating look at the factions that have dominated the recent discourse (“O.K., Doomer,” March 18th). But readers should know that these two vocal cliques do not speak for the entire industry. Many in the A.I. and machine-learning worlds are working to advance technological progress safely, and do not suggest (or, for that matter, believe) that A.I. is going to lead society to either utopia or apocalypse.

These people include A.I. ethicists, who seek to mitigate harm that A.I. has caused or is poised to inflict. Ethicists focus on concrete technical problems, such as trying to create metrics to better define and evaluate fairness in a broad range of machine-learning tasks. They also critique damaging uses of A.I., including predictive policing (which uses data to forecast criminal activity) and school-dropout-warning algorithms, both of which have been shown to reflect racist biases. With this in mind, it can be frustrating to watch the doomers fixate on end-of-the-world scenarios while seeming to ignore less sensational harms that are already here.

(To Marantz’s credit, he makes a passing parenthetical reference to the less sensational middle ground of the AI culture war: “And then there are the normies, based anywhere other than the Bay Area or the Internet, who have mostly tuned out the debate, attributing it to sci-fi fume-huffing or corporate hot air.”)

The talented editors at The New Yorker deftly excised the wordy fluff from my letter, so I’ll try not to add it all back here and instead direct you to others who have written incisively on the work done to uncover and remediate harms created by AI systems today.

- A guest essay for The New York Times by Bruce Schneier and Nathan Sanders contrasts the doomers and the accelerationists (they call the latter “the warriors” and highlight a tendency to cite national security concerns, which may or may not mask simpler economic motives) with a third group of “reformers” who hope to reshape AI via research, education, and regulation to reduce disparate impacts and automated exploitation. “The doomsayers think A.I. enslavement looks like the Matrix,” they write, while “the reformers point to modern-day contractors doing traumatic work at low pay for OpenAI in Kenya.”

- On predictive policing, see The New York Times’ “The Disproportionate Risks of Driving While Black,” ProPublica’s “Machine Bias” and two major stories from The Markup (2021 and 2023).

- The school-dropout-warning system referenced in the letter is detailed in another fantastic investigation by The Markup. The system was found to have substantially higher false positive rates for minority students (though it wasn’t particularly accurate for any group, nor effective at improving outcomes).

- Another thoughtful look at the perils of automated decision-making comes from The Markup’s examination of a potentially unfair policy for the allocation of donated organs.

- Henry Farrell wrote an apt critique of the doomers-versus-accelerationists divide for The Economist: “This schism is an attention-sucking black hole that makes its protagonists more likely to say and perhaps believe stupid things. Of course, many AI-risk people recognize that there are problems other than the Singularity, but it’s hard to resist its relentless gravitational pull.”

- If, for some reason, you need to read even more about the sometimes horseshoe-shaped world of the doomers and accelerationists, Kevin Roose has written about both groups for The New York Times, here and here.

- To close things out, I recommend science fiction author Ted Chiang’s “Will A.I. Become the New McKinsey?” in The New Yorker: “Some might say that it’s not the job of A.I. to oppose capitalism. That may be true, but it’s not the job of A.I. to strengthen capitalism, either. Yet that is what it currently does. If we cannot come up with ways for A.I. to reduce the concentration of wealth, then I’d say it’s hard to argue that A.I. is a neutral technology, let alone a beneficial one.”

[1] Confusingly and sometimes frustratingly, these groups often overlap. A one-sentence “Statement on AI Risk” that compares the risks of AI to nuclear war is signed by Sam Altman (among many others), whose company OpenAI exists in a quantum superposition of preaching about the risks of AI while also making a lot of money off the technology, and lobbies both for and against regulation. Several OpenAI employees quit the company, in part due to safety concerns, to form their own AI behemoth, Anthropic, where employees agonize daily over the implications of their work. Marantz quotes “rationalist” blogger Scott Alexander on how the factions have become strange bedfellows: “Imagine if oil companies and environmental activists were both considered part of the broader ‘fossil fuel community.’”