Teaching machines to understand

Illustration from the Life publication of Vannevar Bush's "As We May Think" (1945), via Google Books.

Last year, I saw that Ignite was holding a night of AI talks at Betaworks, a startup incubator and co-working/event space that happens to be about a block from my office, and they were soliciting pitches for talks. I’d recently gotten back from SIGIR in D.C. and my mind was still spinning with new ideas, so I haphazardly filled out the Google Form with a one sentence pitch for a talk about how “AI is embeddings” [sic].

A few days later I found out that I’d been selected to speak and that the event was in a week. At this point, I took a closer look at Ignite’s signature talk format: 5 minutes, 20 slides, 15 seconds per slide—what had I gotten myself into? Nonetheless, I decided it was a good opportunity to shape some vague thoughts I’d been having into something coherent and to practice public speaking, so I got to work on my slides and drilled my talk over and over.

The event ended up being a huge success. Lots of people showed up to hear ten speakers give a really wide-ranging selection of AI-themed talks on psychedelic art, cyborg teams, canine personhood, and more. While I learned that I apparently speak a bit quietly (whoops), I didn’t panic and forget my notes like I’d feared I would—in fact, my talk went really well!

You can watch the video recording of the talk or read an adapted and slightly expanded version below.

“Teaching machines to understand.” Right off the bat I’m breaking a rule that bothers some people in the AI space: anthropomorphizing algorithms, suggesting that computers do things that humans do like learn and understand.

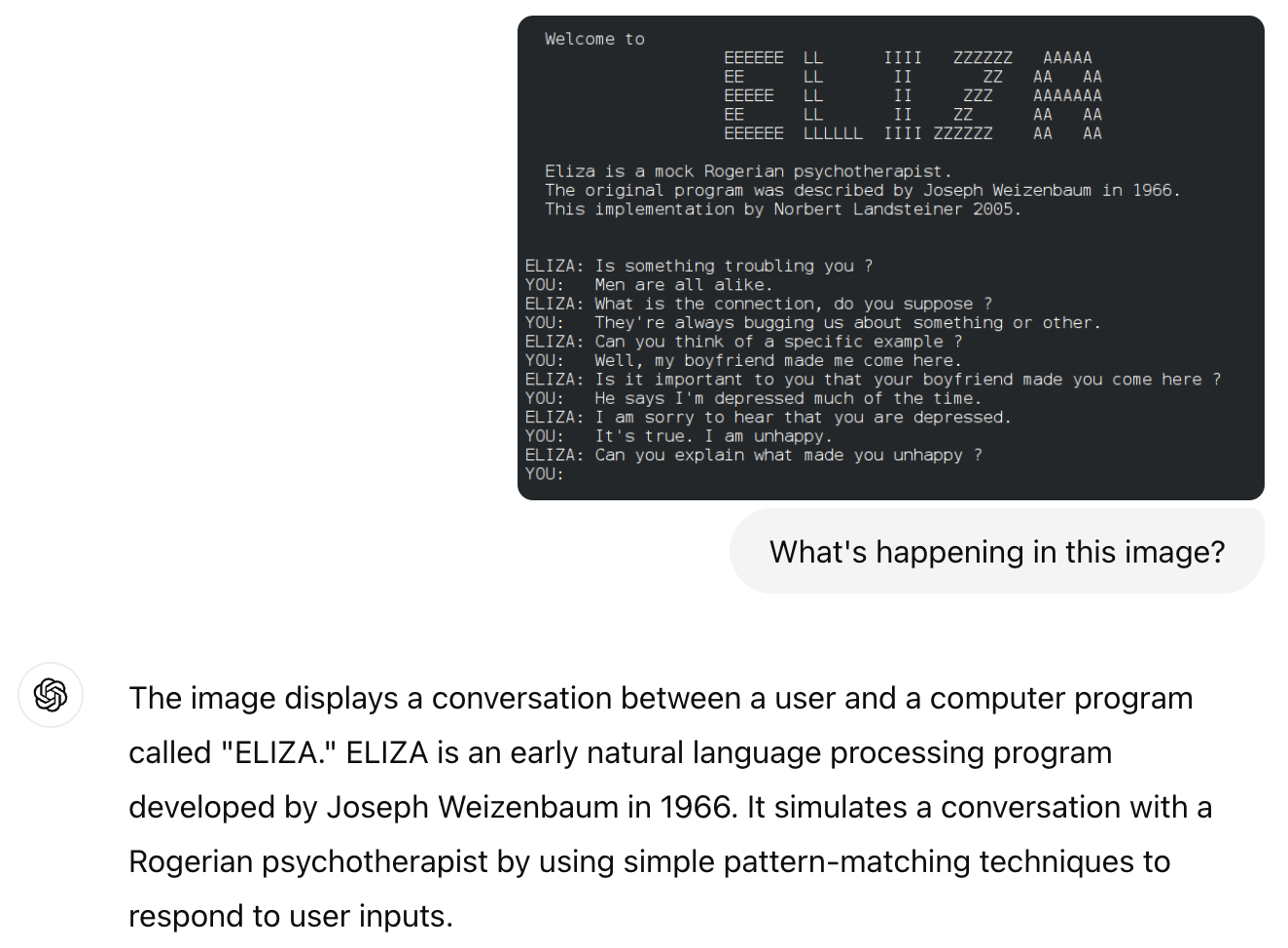

In fact, we’ve been treating computers like people for as long as we’ve had computers. In the 1960s, MIT professor Joseph Weizenbaum developed an early chatbot called ELIZA which could behave like a therapist. Here’s a conversation I had with a modern re-implementation of ELIZA:

> Hello, I am Eliza. I'll be your therapist today.

* I'm nervous about giving a talk

> How long have you been nervous about giving a talk?

* Since a few days ago. The talk is next week.

> Can you elaborate on that?

* I only have a few days to prepare, and the format is tricky.

I'm worried I'll forget what I want to say.

> Do you believe it is normal to be worried you'll forget what you want to say?

You get the idea. While ELIZA used fairly primitive logic, Weizenbaum was surprised at the way users quickly felt that the program understood them not just linguistically but emotionally. Weizenbaum called this experience “powerful delusional thinking”—now we call it the ELIZA effect—but sixty years later everyone and their mother is having similar uncanny experiences with ChatGPT: conversations that feel meaningful, fluid, and human.1

I can send ChatGPT a screenshot of a conversation of ELIZA and ask “What’s happening in this image?” and it’s able to respond with an explanation of exactly what ELIZA was.

Surely this is closer to some notion of “understanding,” but what happened in the decades between ELIZA and ChatGPT to make it possible?

Let’s back up to 1945. Scientist and engineer Vannevar Bush published an essay called “As We May Think” where he described something called the “memex,” a memory-expanding device that would store all of your information and ease the process of retrieving it in a more logical manner. There’s a lot of associated gadgetry described to make this possible, but I’m more interested in the underlying paradigm Bush describes to enable navigating through this new massive data store (emphasis mine).

Our ineptitude in getting at the record is largely caused by the artificiality of systems of indexing. When data of any sort are placed in storage, they are filed alphabetically or numerically, and information is found (when it is) by tracing it down from subclass to subclass. It can be in only one place, unless duplicates are used…

The human mind does not work that way. It operates by association. With one item in its grasp, it snaps instantly to the next that is suggested by the association of thoughts, in accordance with some intricate web of trails carried by the cells of the brain…

Man cannot hope fully to duplicate this mental process artificially, but he certainly ought to be able to learn from it. … Selection by association, rather than indexing, may yet be mechanized.

“The process of tying two items together,” Bush wrote, “is an important thing.”

Modern note-taking software and “personal knowledge bases” let us do just that. Obsidian maintains a graph of your notes, connecting the notes which link to each other. The video below demonstrates how you can browse that graph to see which articles are connected in the Obsidian Help site.

The problem with this is that manually linking together notes is difficult and tedious—I should know, I’ve tried. It’s really valuable when I remember to tie together things like conference talks on similar concepts or with overlapping authors, but most of the notes in my Obsidian database are unlinked orphans, or else linked only to a single hub that lists out a bunch of related pages.

Worse yet, manual links don’t solve the issue of discovery. I can find information I’ve previously filed away as related, but how can I find new topics similar to the ones I care about?

Enter search. Our massive accumulation of digital documents has necessitated the development of tools for retrieving needles from the information haystack. Early digital documents were primarily textual so early search tools relied on term matching, which is useful when you know exactly what you’re looking for but not so great when you’re trying to explore or if you can’t quite remember the right term. When I was trying to recall Bush’s phrase “associative indexing” in “As We May Think,” my searches for “link,” “relation,” and “search” didn’t cut it.

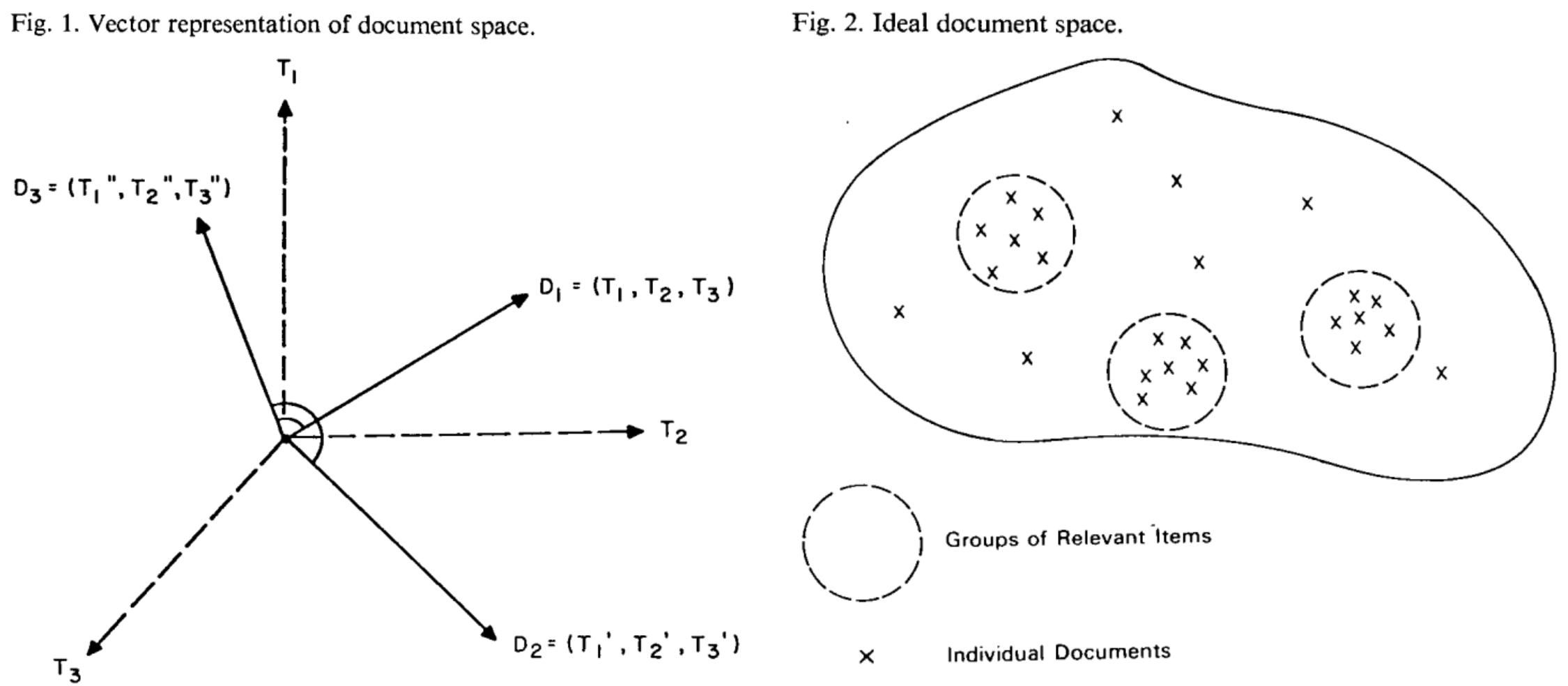

Once again we reach back into history for a foundational insight, this time to 1975 for Gerard Salton, who pioneered the vector space model of indexing.2 Remember how Bush specifically bemoaned the artificial nature of alphabetized or numerical organization of documents? Salton’s insight was to represent documents as vectors—basically lists of numbers, similar to coordinates in space—such that documents which are related to each other have vectors which are near each other in the vector space.

Two figures from Gerard Salton's "A vector space model for automatic indexing" (1975).

Early methods of generating these vectors relied on enumerating specific terms in the document (so it’s no wonder that early search methods relied on term matching). The simplest variant is the bag-of-words vector, where each position in the vector corresponds to a specific word in a predetermined vocabulary. The number in that position is 0 if the term does not appear in the document and 1 if it does—or it could be set to the number of times the term occurs, or some other weighting scheme.

This paradigm of term-based vectors (also called lexical vectors, or sparse vectors since they contain many zeros) survives in updated and augmented forms today, in part because it’s highly effective but also because we’ve figured out how to make it insanely fast, which is important for web-scale search applications. But more recently an alternative approach, with its own advantages and disadvantages, has flourished: rather than deterministically generating vectors based on terms in a document, we can learn them by training a statistical model to capture patterns in massive text corpuses.

One of the best-known versions of learned vectors came in 2013 with word2vec, which fittingly learns vectors for individual words. Given a sentence from a large text dataset, for instance “the fluffy dog barks loudly,” we should be able to remove a word from that sentence, then predict which word was missing. In other words, a very simple model should be able to take the vectors for “the,” “fluffy”, “barks,” and “loudly,” and assign a high probability to the missing word being “dog” (and correspondingly a low probability to words thta don’t fit, like “ingenuity”). Since we start with random vectors, this model will perform terribly at first. But the trick is that each time we try it on a new sentence we nudge the vectors very slightly so that they’ll give a slightly more correct answer next time. This is why we say that the vectors are “learned.”

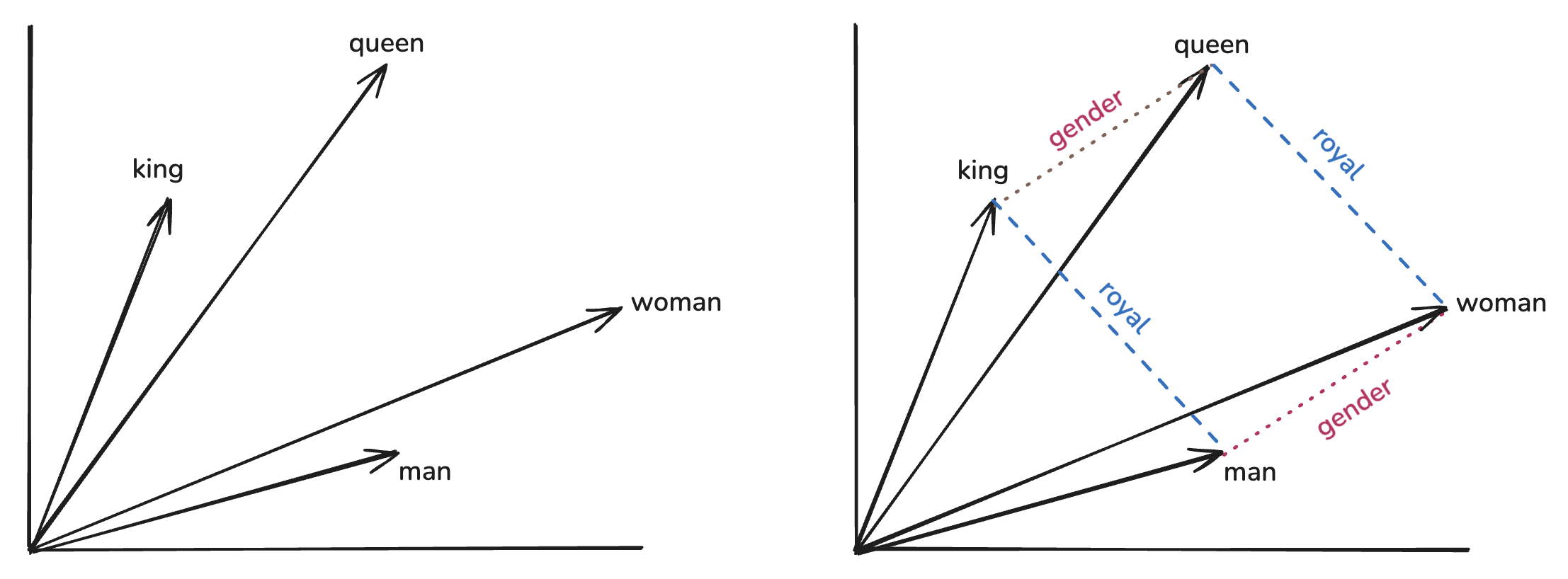

Learned embeddings capture relationships between words.

What exactly do they learn? One famous property of these learned vector spaces is that they capture analogies. As shown above, taking the vector for “king” then subtracting “man” and adding “woman” gets us a vector close to the one for “queen.” This is a cool trick, but the more important property is that synonymous and related terms end up near each other in the space: the nearest neighbors to “happy” are “glad,” “pleased,” and “ecstatic,” while the nearest neighbors to “dog” are “dogs,” “puppy,” and “pit_bull.” We say that the vector space captures “semantic similarity,” which was Salton’s goal from the start.3

In his linear algebra textbook, Sheldon Axler remarks: “So far our attention has focused on vector spaces. No one gets excited about vector spaces.” If the guy who wrote a book on the topic finds this all a bit dry, I’ll forgive you for thinking that perhaps I’ve lost the point. But the fact is that these vectors (often called “embeddings” in modern AI contexts) serve as the fundamental building block for all the ways we use AI to understand. We use it everywhere.

For example, you can see how we’ve begun to free ourselves from exact term matching as well: if representations for similar words (and by extension, similar documents) are located near each other, then search queries no longer have to contain exactly the right word. A query that captures the same meaning will still land near the relevant results.

But we’re not limited to learning vector embeddings for just words or text documents—we can learn them for just about anything, so long as we have a dataset that allows us to say which things are similar and thus should have nearby vectors.

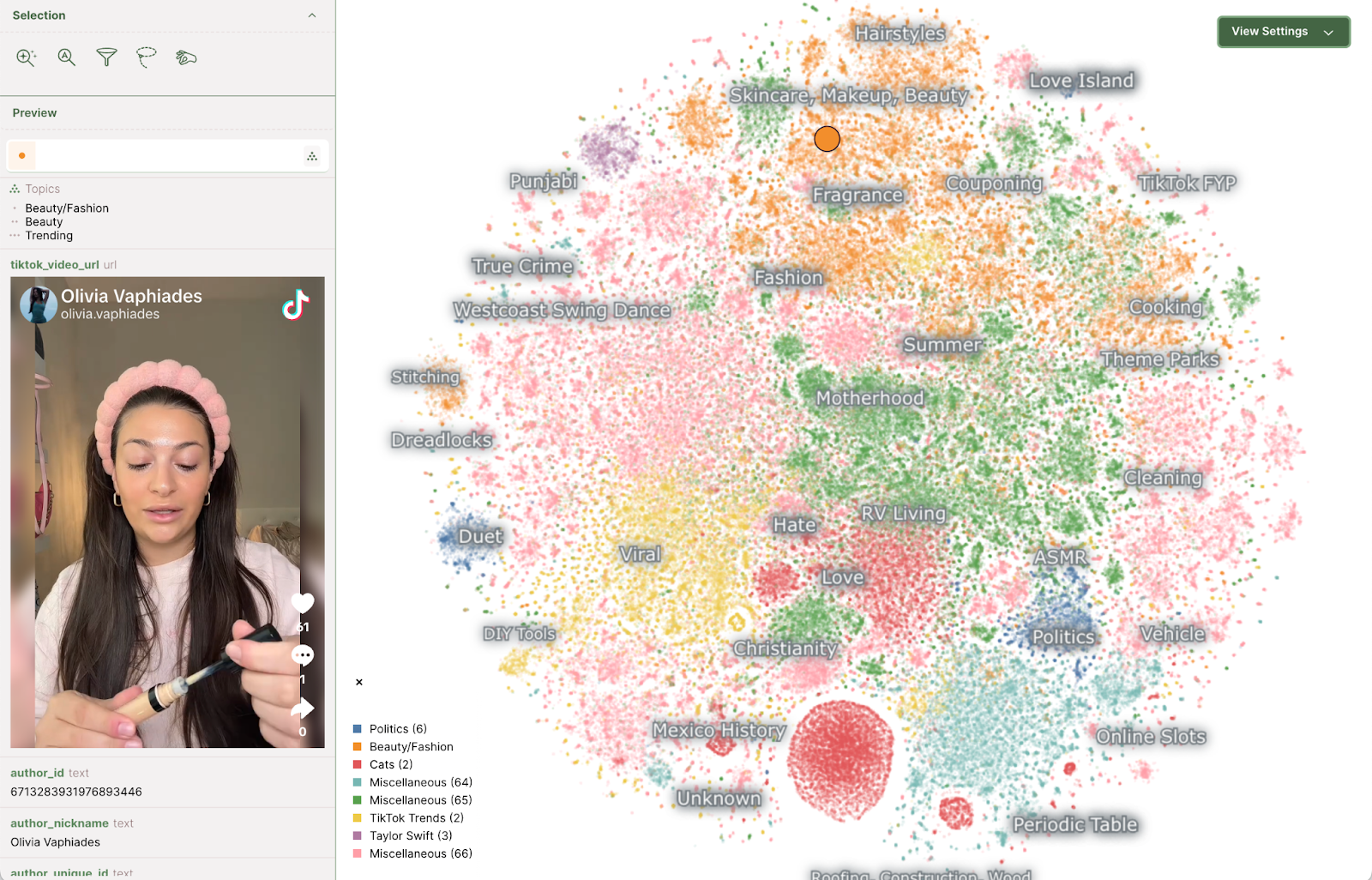

Embeddings of trending TikTok videos from Nomic Atlas.

We’re able to navigate massive datasets in ways that are useful and pleasing to humans. Nudge vectors together for movies that were watched by the same people and you’ll learn a recommendation model like the one that powers your Netflix homepage. In fact, learning movie embeddings for recommendation predates the Word2Vec paper and was a key development for researchers working on the Netflix Prize, a competition to design a recommendation system for Netflix.

The Netflix prize didn’t contain any metadata about the movies (director, year of release, genre), relying only on knowledge of which users liked the same movies—think “people who liked this also liked that.” But in reality we often do have additional information available and we can use it to learn even more robust representations, especially when we leverage multi-modal data like images or audio. Etsy rolled out a “search by image” feature which finds items on Etsy which are “visually similar” to the photo the user provided. Their model aimed to capture both categorical accuracy (an image of a doll should return doll products) as well as visual similarity (an image of a green doll should return products that are also green). Accomplishing this required a model which leverages both the image data and product categories provided by Etsy sellers.

A query image and the top results when searching in two different vector spaces using Etsy's search by image models.

I argue that this is a form of understanding because we’ve narrowed the gap between the qualities of data that we care about (I want a movie that’s similar to Die Hard or a doll that looks like the one I had as a kid) and the representation of that data that our computers understand—we’ve built digital reprsentations that encode semantic qualities that are meaningful to humans.4 The photos I take on my phone aren’t just a collection of pixels anymore because I can search “antiques” in Google Photos and it’s able to return to me photos of weird stuff I’ve found in antique shops through the years.

The experiences we’ve built with this technology can feel straight out of science fiction, like snapping a quick photo of a bookshelf, selecting one of dozens of spines, and then instantly pulling up a website to buy the identified book. It might not be as showy as the computers from Minority Report or Blade Runner, but it’s something that simply wasn’t possible not so long ago and now it barely registers as novel.

The 2014 xkcd comic “Tasks” demonstrates how the line between easy and difficult technical tasks can be subtle. Identifying whether a photo was taken in a national park is easy: “Sure, easy GIS lookup. Gimme a few hours.” Identifying whether the photo is an image of a bird is hard: “I’ll need a research team and five years.” One month later, engineers at Flickr had built a prototype to do just that. Ten years later, you can buy binoculars which identify the bird species you’re looking at.

We’ve undoubtedly fulfilled some of the goals Bush envisioned with the memex—organizing data based on its meaning and relationships to other information—but I think we should keep pushing the envelope and dreaming up ways to build machines which better map to the way that we think about the world and which understand what we mean and desire. We’ve come a long way from needing to try out different keyword combinations to find what we’re looking for online, but as long as I still have to learn “best practices for prompt engineering”—as long as we still have adapt our behavior and our inputs in order for computers to understand us—then there’s still room to improve.

So I’m exited for us to keep pushing towards the next bird-identifying binoculars: tasks that seem hard today, that require even richer notions of machine understanding. What points would make a persuasive argument for my thesis? What chemicals would be able to treat this disease? What decisions could help me flourish?

-

In the course of my writing this blog post, I’ve come across a few more alarming ELIZA effect anecdotes. OpenAI released their new GPT-4o model and dedicated a section in the model’s system card to “anthropomorphization and emotional reliance,” noting that “During early testing […] we observed users using language that might indicate forming connections with the model. For example, this includes language expressing shared bonds, such as ‘This is our last day together.’”

A more chilling moment comes from an article Laura Preston wrote for n+1. At an AI convention, the author witnessed the CEO of a veterinary chatbot explain how their bot had convinced a woman to euthanize her pet dog: “The CEO regarded us with satisfaction for his chatbot’s work: that, through a series of escalating tactics, it had convinced a woman to end her dog’s life, though she hadn’t wanted to at all. ‘The point of this story is that the woman forgot she was talking to a bot,’ he said. ‘The experience was so human.’”

The models keep getting better, and the impact of anthromoporphization grows stronger. ↑ -

Gerard Salton was referenced constantly at SIGIR—the conference’s biggest award is even named after him. The vector space model remains fundamental to information retrieval, with many of the field’s contributions amounting to new ways of crafting the vectors. It was the conference’s ubiquitous throughline of vectors—dense, sparse, learned, hand-crafted—that inspired me to take this on as a topic for a talk. ↑

-

You can look at more nearest neighbors with this web app, and play a Worldle-clone based on semantic similarity at Semantle. ↑

-

The Wikipedia article on the semantic gap contains a great illustrative example: it’s very easy to find a document on my computer containing the word

funny, but very difficult to find an image on my computer that I will find funny. Our digital representations have historically offered poor allowances for us to navigate them in ways that we might like to. ↑